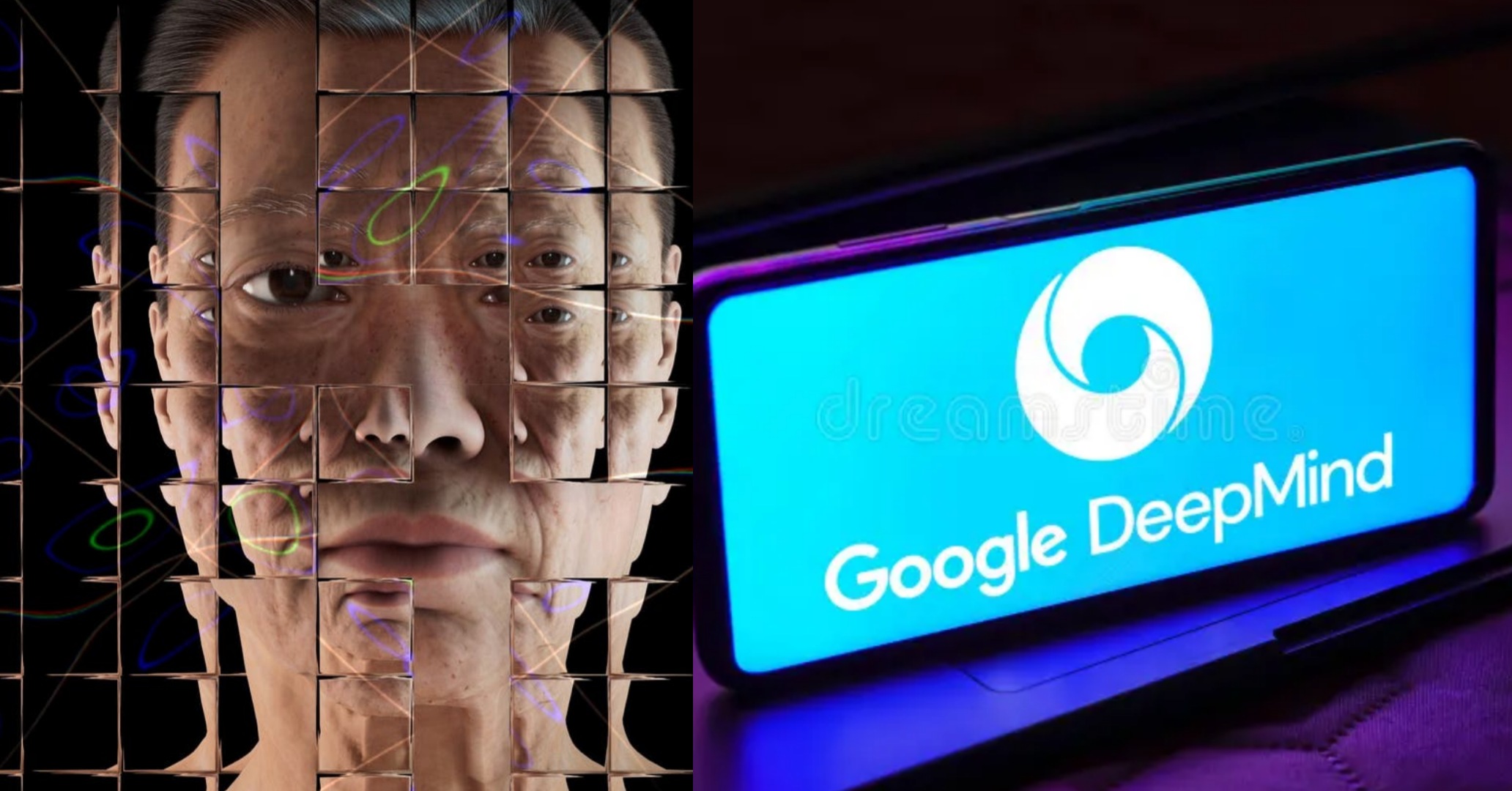

Google is testing a digital watermark that detects images created with artificial intelligence (AI) to combat disinformation.

Developed by DeepMind, Google’s AI division, SynthID identifies machine-generated images.

It embeds changes into individual pixels in an image, making the watermark invisible to the human eye but detectable by computers.

But DeepMind said the system is not robust enough to withstand extreme image manipulation.

As technology advances, distinguishing between real and artificially generated images is becoming more complex. AI image generators have gone mainstream, with over 14.5 million users of the popular tool Midjourney.

The ability to create images in seconds by entering simple text instructions has raised questions about copyright and ownership around the world.

Google has its own image generator, Imagen, and its system for creating and verifying watermarks is applied only to images created with this tool.

A watermark is usually a logo or text added to an image to indicate ownership and sometimes make it difficult to copy or use the image without permission.

Images used on the BBC News website, for example, usually carry a copyright watermark in the lower left corner. However, such watermarks can easily be edited out or cropped, making them unsuitable for identifying AI-generated images.

Technology companies use a technique called hashing to create digital “fingerprints” of known abuse videos, allowing them to be identified and quickly removed as they go viral online. However, these too can be corrupted if the video is cropped or edited.
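The fragility of an exact fingerprint can be shown with a minimal sketch. It assumes a plain SHA-256 digest as the fingerprint and uses made-up bytes in place of a real video file; the names `fingerprint`, `is_known` and `known_fingerprints` are hypothetical, and the hashing schemes companies actually deploy may be more tolerant of small changes than this.

```python
import hashlib


def fingerprint(data: bytes) -> str:
    """Return a SHA-256 hex digest, used here as an exact-match fingerprint."""
    return hashlib.sha256(data).hexdigest()


# Stand-in for the bytes of a known video file (hypothetical data).
original = bytes(range(256)) * 4

# Simulate a tiny edit (one bit flipped) and a crop (the tail removed).
edited = bytes([original[0] ^ 1]) + original[1:]
cropped = original[:-16]

# Database of fingerprints for known material.
known_fingerprints = {fingerprint(original)}


def is_known(data: bytes) -> bool:
    """Check whether the data matches a stored fingerprint exactly."""
    return fingerprint(data) in known_fingerprints


print(is_known(original))  # the untouched copy is matched
print(is_known(edited))    # the edited copy no longer matches
print(is_known(cropped))   # the cropped copy no longer matches
```

In this sketch, changing a single bit or trimming a few bytes produces a completely different digest, so the altered copies slip past the lookup.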

Google’s system creates a virtually invisible watermark that allows users to use software to instantly verify whether an image is genuine or machine-generated.

Unlike hashes, the company’s software can still detect the watermark after an image has been cropped or edited. “You can change the color, you can change the contrast, you can even resize it… [and DeepMind] can still recognize that it was generated by an AI,” he said.

But he warned that this was an “experimental launch” of the system and that the company needs to educate those using the system more about its robustness.

In July, Google, as one of seven artificial intelligence giants, signed a voluntary agreement in the United States to ensure the safe development and use of AI. This includes ensuring that people can recognize computer-generated images through the implementation of watermarks.

Kohli said the move reflected those commitments, but Claire Leibowicz of the campaign group Partnership on AI said more coordination was needed between companies. “I think standardization in this area will help,” she said.

“We have different methods in place and we need to monitor their impact. How can we get better reporting on which methods are working for what purpose?”

“Many institutions are exploring different methods, which adds to the complexity, as our information ecosystem relies on different ways of interpreting or rejecting AI-generated content,” she said.

Microsoft and Amazon, along with Google, are among the major technology companies working to watermark some AI-generated content.

Beyond images, Meta has published a research paper on its unreleased video generator, Make-A-Video, stating that a watermark will be added to generated videos to meet similar transparency requirements for AI-generated works.